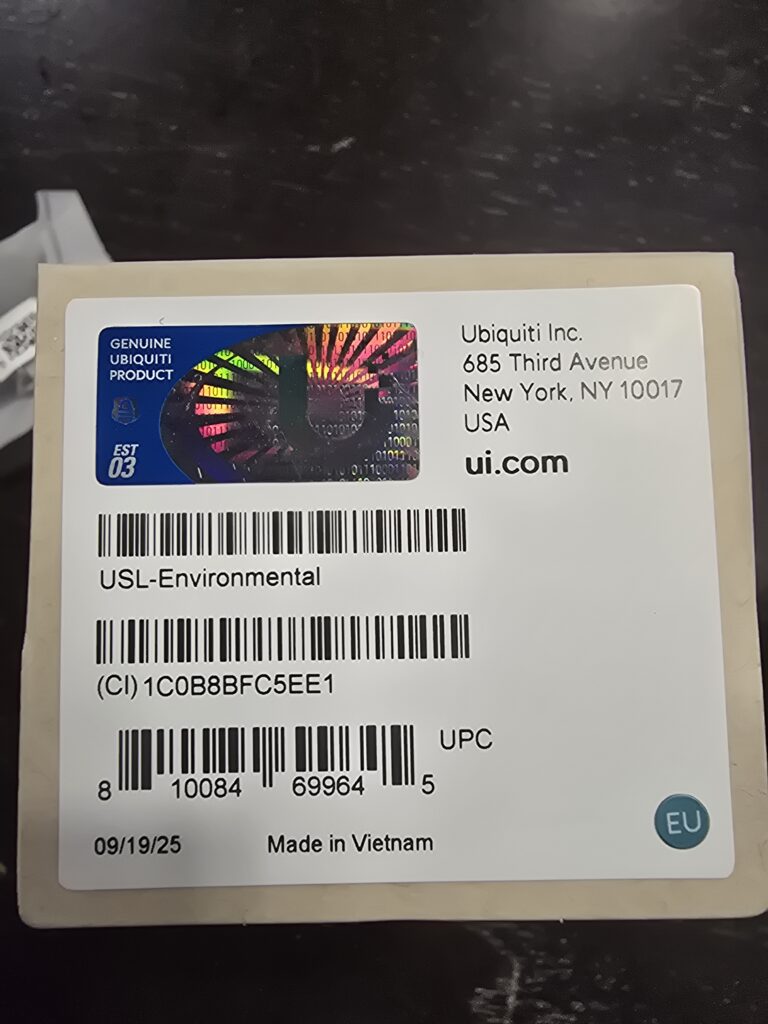

First off, let's start with a warning: if you order your SuperLink and sensors from the Ubiquiti US store, you may receive some of the units as the EU model (in our case, all of the sensors) alongside a US SuperLink, and, well... they don't work together. You can tell whether you've ended up with an EU sensor or device by a small sticker on the box like this:

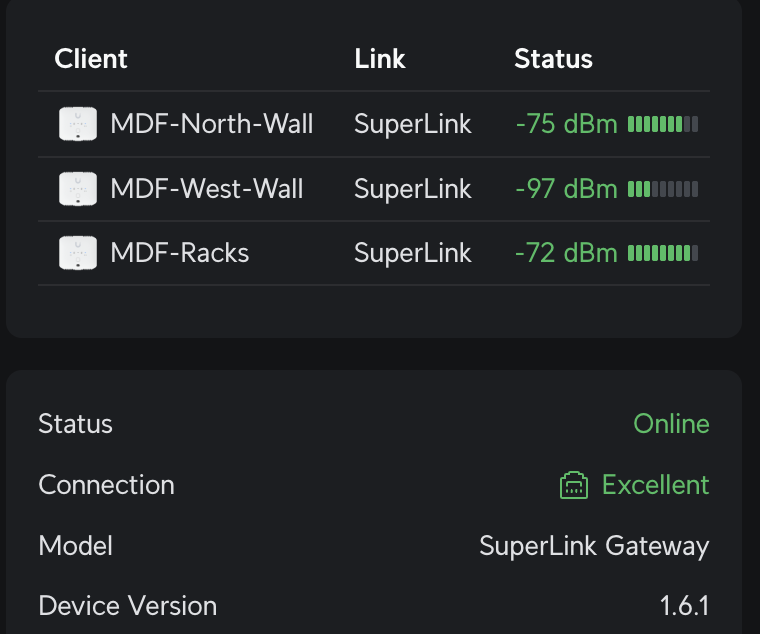

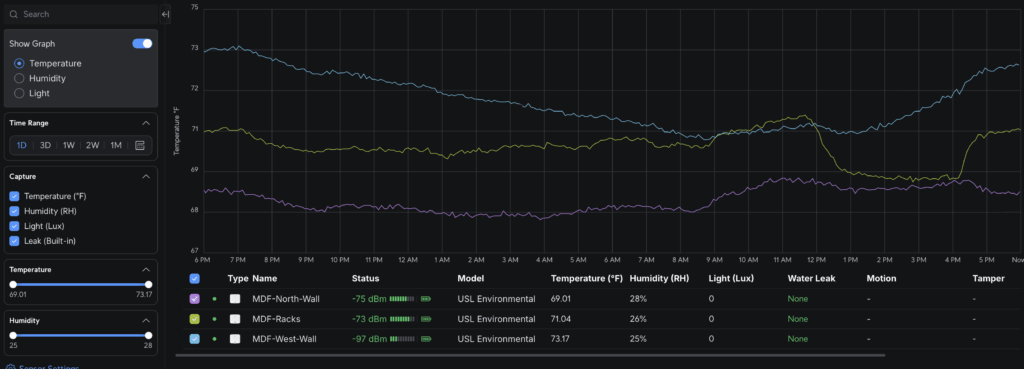

But once you have the right sensors (kudos to UBNT for overnighting us replacements), they come up very quickly: just pull the battery tab. So far, so good. They've been reliable connection-wise for us, and even testing through concrete-filled CMU with steel reinforcement, we still have a reliable connection and reliable monitoring.

Overview: What is SuperLink?

The SuperLink platform from Ubiquiti is a new wireless sensor protocol and gateway ecosystem designed to integrate with the UniFi OS / UniFi Protect environment and deliver IoT sensor connectivity with enterprise-grade range, latency, and battery longevity.

Key technical highlights

- SuperLink is designed for multi-kilometre line-of-sight range, enabling large-scale deployments (industrial, commercial, smart buildings) rather than being limited to short-range BLE sensors.

- Ultra-low-latency communications, tailored for security, alarm, and automation sensor use cases.

- Efficient power management: supports endpoints with long battery life (key for sensors in remote or rarely visited locations).

- Integrated into UniFi OS: the gateway is adopted into UniFi Protect, which means your existing UniFi-based infrastructure (if you have one) can leverage the sensors and gateway.

- Dual radios: the gateway supports both Bluetooth (for legacy BLE sensors) and the proprietary sub-GHz SuperLink radio (for the new sensors).

In short: if you manage facilities (data center racks, network closets, remote MSP sites) and need environmental monitoring (temperature, humidity, water leaks, light) with wide coverage and minimal wiring, the SuperLink + USL-Environmental combo offers an interesting path.